Effort estimation — it’s complicated Link to heading

On the dilemma of evaluating software development effort Link to heading

For the past few months, estimating effort, a long-standing dilemma of the software industry, bothers me. How should we, as SW engineers, deal with the accuracy of effort estimations? The answer is complicated and has implications over developers’ career growth, having an impact on personal development. There is much at stake.

So, I felt the urge to take action. I decided to share my doubts about it over twitter.

Which led to an interesting (IMHO) debate over this subject. Below I’ll try to outline the various arguments.

No Silver Bullet, Estimation is hard. Link to heading

First, I’ll start with Michael Shpilt’s reply, saying that effort estimation is probably one of the hardest things in software, he’s right. Suggesting that to reduce the risk of failing to estimate accurately, one should be knowledgeable about the application’s domain.

Besides, the estimator should choose ahead of time who are the developers that going to participate in the required feature development. Stating that choosing the best-fitted team-members based on their codebase familiarity will help in reducing the risk.

I tend to agree on the necessity of specific knowledge in the application’s domain, as without it, things might go the wrong way; it is crucial to the success of the development.

As for choosing specific people, it is a task that should be done gently, estimations shouldn’t be based on particular people’s involvement. It may result in a lack of motivation among the team because only specified individuals get specific assignments. Feelings should be considered too, we are not machines. If you insist on proceeding with this procedure, make sure to communicate why to soften the message.

Furthermore, as broader the unknown, the higher chance a senior developer will be able to mitigate the risk. Leading to the point that inexperienced engineers won’t be able to gain knowledge about unfamiliar parts of the system. I guess the truth is somewhere in between.

Tal Joffe mentioned the excellent [talk] (https://summit2019.reversim.com/session/5c7859231503d900172c583e.html)(in Hebrew) given by Itay Maman on Reversim 2019 summit. Itay has made his slides available (🙏), which may assist for non-Hebrew speakers.

In his talk, various points are being raised, helping to lower a few of the risks mentioned above. Tal’s takeaways summarized it well. He says that the lecture’s main delivered message is the need to focus on high-level estimation.

Meaning tasks should be broken into buckets of effort — a few hours/one day/few days/more. The “More” is Tal’s version for tasks that take longer than a few days, forcing him to break them down to smaller tasks and repeat the process.

I tend to agree with him. Smaller tasks allow accurate estimates and create opportunities to share the development effort between team members, including the inexperienced engineers (and as a by-product gain knowledge and mentor them).

However, Itay has pointed out that it will only shift the estimation’s difficulty to the challenge of identifying the smaller chunks, making it work only in theory. There is no panacea here - It’s harder than you think (IHTYT).

Is #NoEstimates the answer? Link to heading

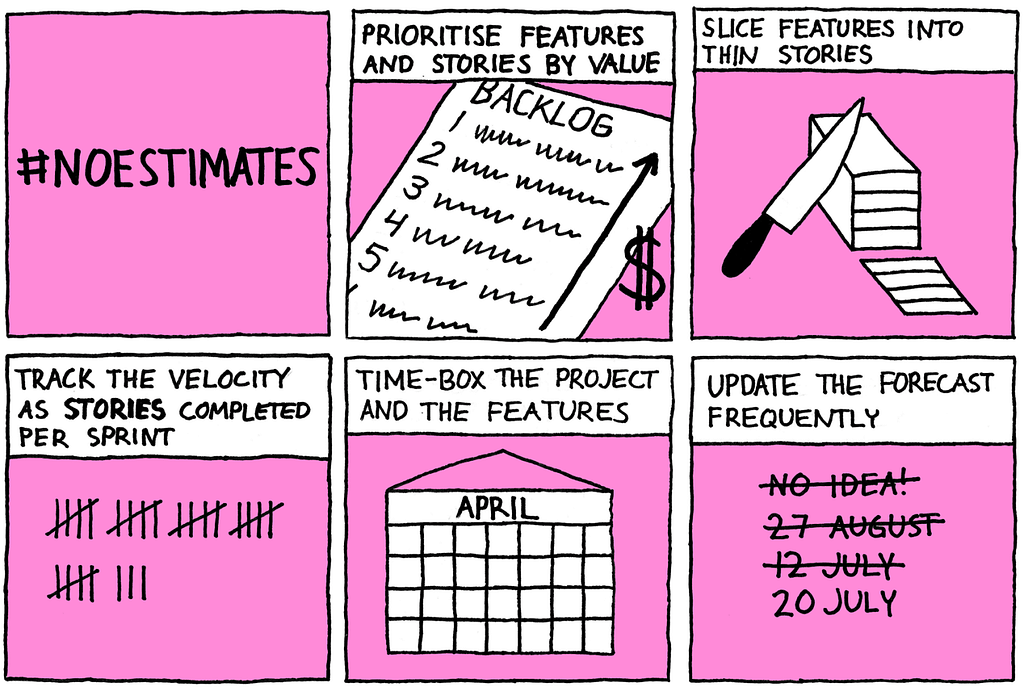

NoEstimates as summarized by Magnus Dahlgren

As stated by Gal Zellermayer, you should start with the why? It will help you understand the need, forcing you to adjust your estimation methodology accordingly.

Some are very thoughtful, others are guesstimates. Some are detailed oriented, others are high level. Maybe it is just for self-improvement, or maybe you don’t need it.

This approach is especially true when there is no baseline to compare with.

Another essential fact raised by Ophir Harran as a reply to Gal’s tweet. In his words, accepting failed estimation is vital over time to improve accuracy; otherwise, you buffer 3x to mitigate, and that cripples teams.

Couldn’t agree more on that, it may lead to the false perception that effort estimation == duration, which is the root of all evils 👹.

It creates the impression that teams should finish their development tasks based on a given number. Without considering it was an estimate (which might be inaccurate depending on the number of unknown factors). Moreover, it might start a blame game (Also mentioned by Itay) between team members on who should take responsibility for the delay\failure to deliver. Resulting in, paying the price of quality hit and surging technical debt, leading to customer dissatisfaction.

Omer Raviv joined both of them in recommending Woody Zuill’s approach, #NoEstimates. If you haven’t watched his talk, go ahead and watch it. It’s a must.

Omer said that it may sound dramatic at first. Still, it might be just a call to explore other ways to solve things without asking how long will it take while avoiding spending time overestimations.

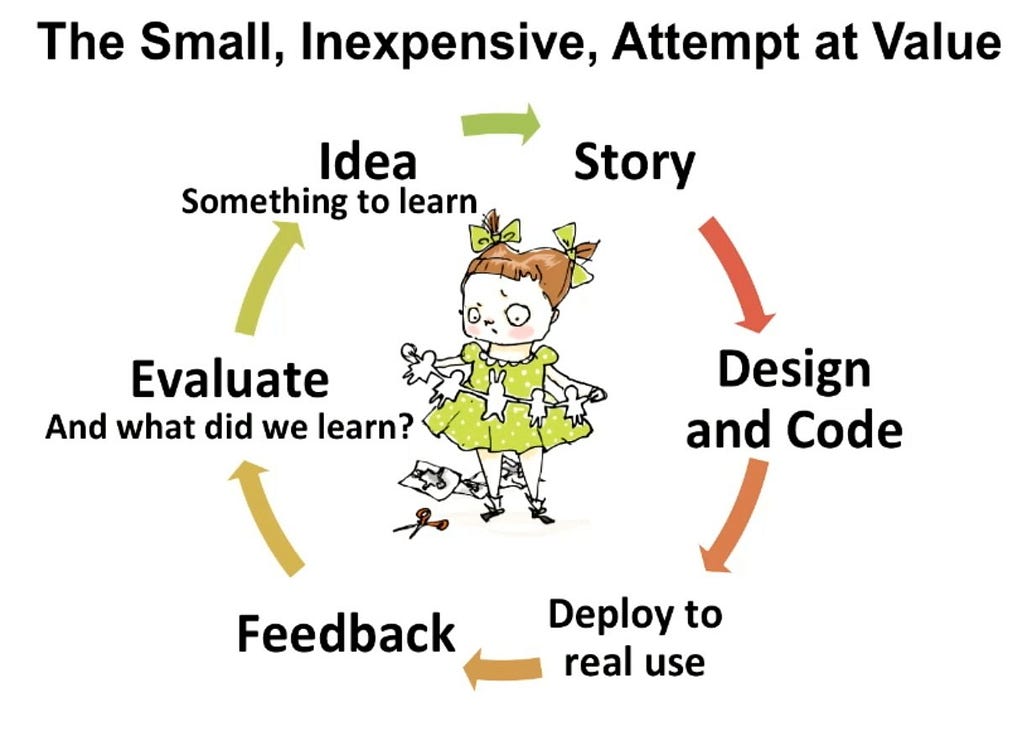

In his talk, Woody suggests to select the most essential feature, break it down into a smaller neutral piece of work, develop. Iterate till you ship it or until it is no longer critical.

NoEstimates in a nutshell

He even replied to my tweet. Explaining that in his talk, he shares how he works without estimates. But does not insist that it is the only way to work without estimates, or as Martin Fowler said in his blog:

If they are going to affect significant decisions, then go ahead and make the best estimates you can.

Overall Link to heading

My main takeaway is that as SW engineers, we should always question the practices we use, be open-minded about it. If we’ll say “It’s always been done that way”, we won’t progress. Having doubts over our work procedures will push us forward, making us a bit better every time.

This reminds me that software is made by humans, so consider it when you take any action.

AHA and, don’t assume! define metrics, measure, and then forecast.

Assumptions